For the 17 percent of American adults—or 36 million people—who live with hearing loss, navigating daily life can be an exhausting gauntlet of lost conversations. Older adults with hearing loss in particular often find noisy situations overwhelming and may opt to avoid gatherings they once enjoyed, risking isolation and even cognitive decline.

Now, researchers from the Columbian College of Arts and Sciences (CCAS) Department of Speech, Language and Hearing Science are helping adults with hearing loss join the conversation through novel approaches to lipreading training. Combining knowledge from cognitive neuroscience with real-life lessons from their lipreading training sessions, a team of experts (Professor of Speech, Language, and Hearing Science Lynne E. Bernstein; Associate Research Professor Silvio P. Eberhardt; Associate Research Professor Edward T. Auer; and Clinical Coordinator of Audiology Nicole Jordan) is stressing lipreading as a vital component for improving audiovisual speech recognition amid noise.

Through a pair of National Institutes of Health-funded studies, the team is conducting remote lipreading training with more than 200 people with hearing loss, introducing new strategies along with its own state-of-the-art training software.

Their approach emphasizes the relationship between seeing and hearing in communication, drawing in part from cognitive neuroscience studies led by Bernstein and Auer that track visual speech as a complex process across the visual, auditory and language processing areas of the brain. “It is now acknowledged more widely that there are two [speech processing] pathways—one through the ears and one through the eyes,” Bernstein said.

Still, lipreading presents significant challenges, not the least of which is that it can be extremely hard to learn. In fact, some experts still believe that lipreading can’t be taught, a claim the CCAS researchers reject. “Lipreading ability is not an inborn trait,” Bernstein said. Instead, the team’s findings suggest that properly taught lipreading can help people with hearing loss, as well as people with normal hearing, use both listening and looking skills to bolster their ability to communicate.

“When good lipreading is combined with hearing—even when it is impaired—the results are typically better than the sum of hearing alone plus lipreading alone,” Bernstein said. “That is: 1 + 1 often equals a lot more than 2.”

Seeing the Talker

Given the sophistication of digital hearing aids and cochlear implants, the CCAS experts said researchers often underestimate the benefits of looking at people when talking, a practice that has become even more challenging with the prevalence of face masks during the pandemic. But even the highest-functioning devices have limitations, especially in noisy settings. While the researchers said hearing aids can improve speech perception in noise by 2 or 3 decibels, some studies show that seeing speech while hearing it is functionally equivalent to 12 or more decibels of noise reduction.
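To put those decibel figures on a common footing, the short sketch below converts a decibel change into a power ratio. It assumes the figures describe an effective signal-to-noise improvement, which the researchers' summary does not spell out, and the function name is purely illustrative.

```python
def db_to_power_ratio(db: float) -> float:
    """Convert a decibel change to the equivalent power ratio (10 ** (dB / 10))."""
    return 10 ** (db / 10)


if __name__ == "__main__":
    # Figures quoted in the article: 2 to 3 dB for hearing aids in noise,
    # 12 dB or more when visual speech is combined with hearing.
    for label, db in [("hearing aid, low", 2), ("hearing aid, high", 3), ("audiovisual", 12)]:
        print(f"{label}: {db} dB is a power ratio of about {db_to_power_ratio(db):.1f}")
```

Under that assumption, 12 decibels corresponds to roughly a 16-fold power ratio, compared with about 1.6 to 2 for a gain of 2 or 3 decibels.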

“Hearing aids are just that: aids,” Jordan said. “In instances where the hearing aids alone are not enough, lipreading can help bridge the gap between hearing and understanding.” And, the researchers maintained, the pandemic has only heightened the importance of visible speech. “Now, more than ever, people are realizing that they rely on lipreading and facial expressions more than they realized,” Jordan said.

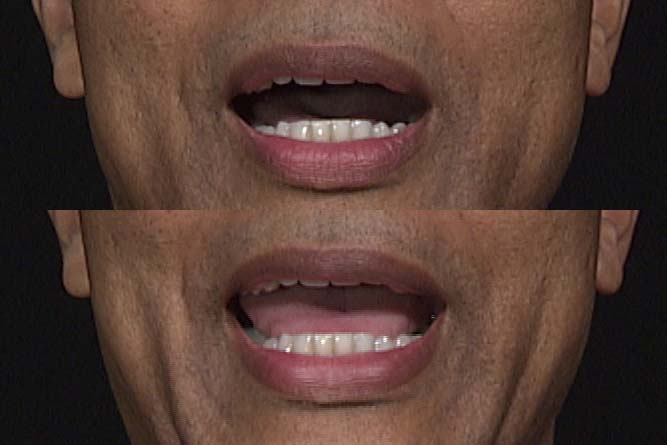

Observing a person’s face can offer an array of communicative information, from social cues to speech signals. But while good listeners may be adept at making eye contact, effective lipreading requires being carefully attuned to the lower part of the face, a tactic that improves with effective lipreading training. “The first thing that happens is [lipreaders] learn how to look, in the sense that instead of paying attention to the talkers’ eyes, they start looking at their mouths,” Bernstein said.

At the same time, the researchers point to decades of flawed training methods, which often overemphasize guessing or place too much focus on sounding out individual letters rather than recognizing full words. “We don’t want somebody looking at mouths and saying, ‘Well, the tongue is between the teeth. I think that’s a T-H sound,’” Auer said. “You’ll never be able to do that fast enough to actually perceive speech.”

Instead, the team’s training uses software to analyze errors and give feedback based on the words the lipreader actually thought the talker said. These are the “near miss” errors that sound far off but may be surprisingly close to correct. For example, a lipreader may see the sentence, “Proof read your final results,” and think the talker said, “Blue fish are funny.” The software would note the near misses, such as the “r” in “proof” and the “l” in “blue,” which look similar but are visually distinct.
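The idea of a "near miss" can be illustrated with a toy sketch. This is not the team's software: the letter groupings, names, and scoring below are hypothetical, chosen only to show how a response that shares no letters with the target can still be close once look-alike mouth shapes are grouped together. A realistic system would presumably work from speech sounds and measured visual confusions rather than spelling.

```python
from difflib import SequenceMatcher

# Hypothetical letter-based "look-alike" groups (an assumption for illustration only).
VISUAL_GROUPS = {
    "B": "pbm",    # lips pressed together
    "F": "fv",     # lower lip against upper teeth
    "L": "lr",     # treated as near neighbours in this toy model
    "V": "aeiou",  # all vowels collapsed into a single class
}
LETTER_TO_GROUP = {ch: group for group, letters in VISUAL_GROUPS.items() for ch in letters}


def visual_code(word: str) -> str:
    """Replace each letter with its look-alike group; ungrouped letters stay as-is."""
    return "".join(LETTER_TO_GROUP.get(ch, ch) for ch in word.lower() if ch.isalpha())


def letter_similarity(target: str, response: str) -> float:
    """Similarity of the raw spellings, from 0.0 to 1.0."""
    return SequenceMatcher(None, target.lower(), response.lower()).ratio()


def visual_similarity(target: str, response: str) -> float:
    """Similarity after mapping both words to look-alike groups, from 0.0 to 1.0."""
    return SequenceMatcher(None, visual_code(target), visual_code(response)).ratio()


if __name__ == "__main__":
    print(visual_code("proof"), visual_code("blue"))
    print(letter_similarity("proof", "blue"), visual_similarity("proof", "blue"))
```

In this toy model, "proof" and "blue" share no letters at all, yet their look-alike codes differ only by the final "f", which is the kind of closeness that feedback on near misses can draw attention to.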

The team’s other recommendations include tailoring training to different ability levels and varying sample talkers to showcase numerous vocal tracts and dialects. Just as important, they noted, is acknowledging that learning to lipread effectively is a long-term process, but one that can ultimately succeed in the right conditions.

“We shouldn’t expect anyone to improve their lipreading overnight,” Bernstein said. “But our studies show that, with good training, people keep improving. We’ve never seen anyone top out.”